firstworkdate Qlik Equivalent in Spark SQL

Hi There!

I was chatting with a friend and he was facing a problem on a migration project.

The old script and processes was built with Qlik, I have never heard of it until now. There the script was using a called a function called as

firstworkdate

The firstworkdate function returns the latest starting date to achieve no_of_workdays (Monday-Friday) ending no later than end_date taking into account any optionally listed holidays. end_date and holiday should be valid dates or timestamps.

Here I have excluded the holiday part though. Please suggest if you have anything in mind to implement it.

I still think its a very lame solution though but it works. 😀

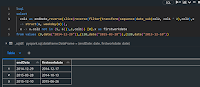

Here is a proposed solution:

My approach was is to get all the dates twice as length the supplied date. It can be optimized though.

Then I am using the transform function to calculate weekday for each date, basically i am creating a struct out of the sequence of dates.

Then I am filtering the dates excluding the Saturdays and Sundays. Then I am reversing it and slicing it based on the required no of days specified. Then I am again reversing the array and getting the date for first element.

Let me know what do you think on this.

Thanks for reading!!

Comments

Post a Comment